Pseudo-Distributed Mode Configuration

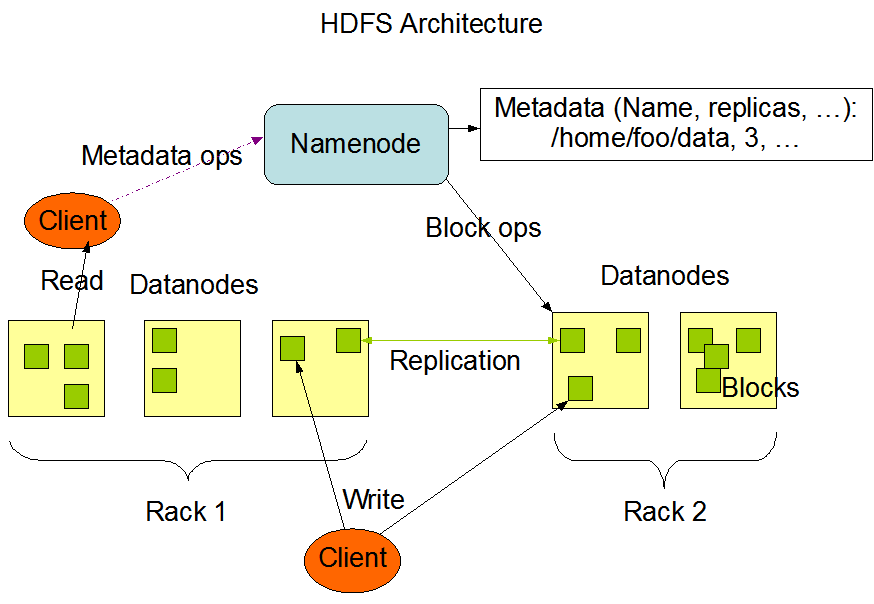

In most cases, Hadoop runs in a cluster, meaning it is deployed across multiple nodes. Hadoop can also run on a single node in pseudo-distributed mode. In this mode, each daemon (NameNode, DataNode, ResourceManager, NodeManager, and so on) runs as a separate Java process, which simulates a multi-node cluster. While you are learning, there is no need to spend resources provisioning separate machines, so this section and the following chapters mainly use pseudo-distributed mode for the Hadoop “cluster” deployment.

Create Directories

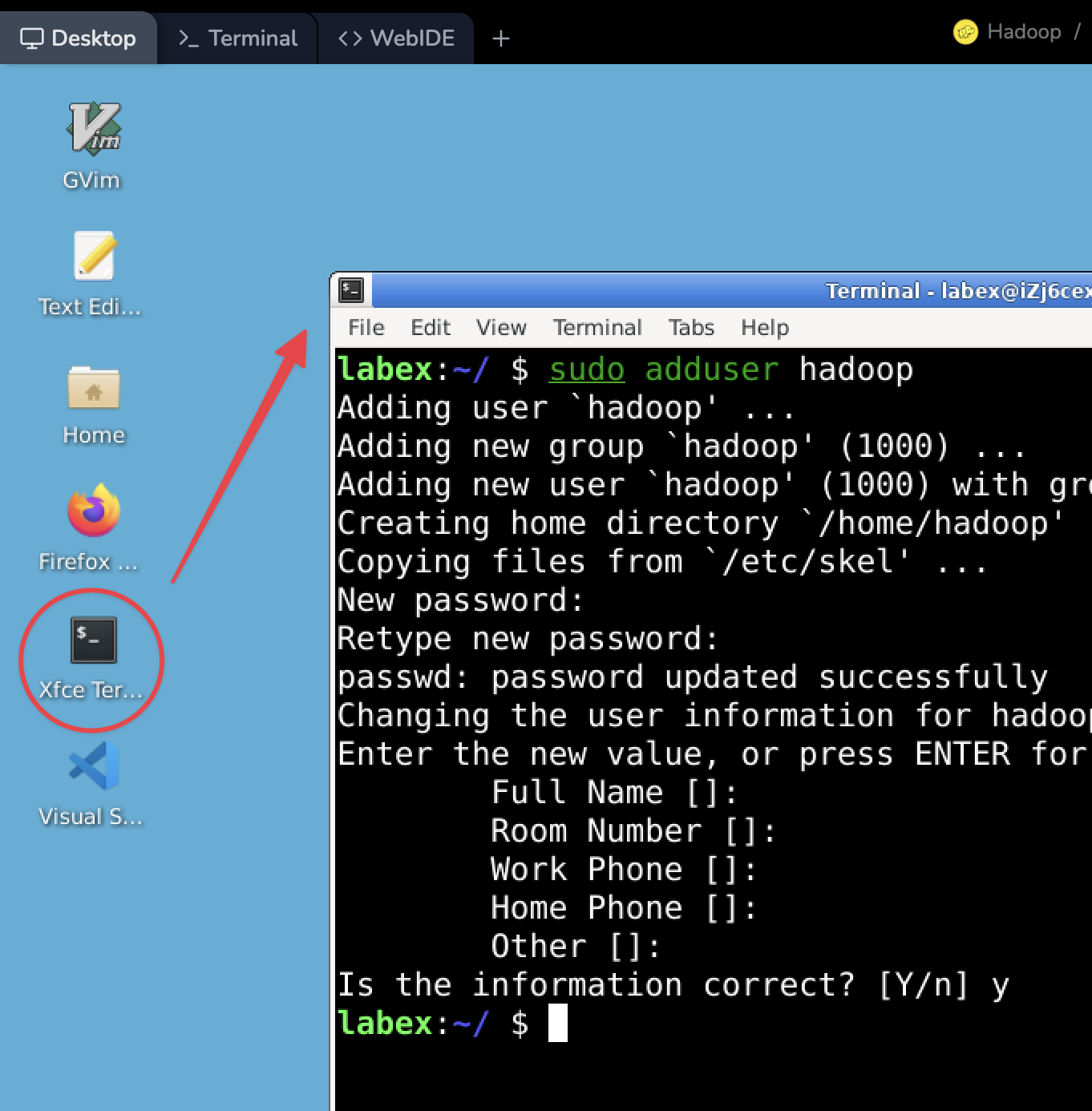

First, create the storage directories for the NameNode and DataNode in the hadoop user's home directory. The first command removes any existing ~/hadoopdata directory, including any HDFS data stored in it, so run it only on a fresh setup:

rm -rf ~/hadoopdata

mkdir -p ~/hadoopdata/hdfs/{namenode,datanode}
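The two commands above can also be wrapped in a small function, which is convenient if you reset the setup several times. This is a minimal sketch. The function name prepare_hdfs_dirs and its optional base-directory argument are hypothetical. The function writes out both paths instead of using brace expansion, so it also runs under a plain POSIX sh. Like the rm -rf above, it deletes everything under the base directory first.

```bash
# Hypothetical helper: recreate the NameNode and DataNode storage directories.
# WARNING: like "rm -rf ~/hadoopdata", this deletes everything under the base directory.
prepare_hdfs_dirs() {
  local base="${1:-$HOME/hadoopdata}"

  rm -rf "$base"
  mkdir -p "$base/hdfs/namenode" "$base/hdfs/datanode"

  # Report an error if either directory is missing after creation.
  local d
  for d in namenode datanode; do
    if [ ! -d "$base/hdfs/$d" ]; then
      echo "missing: $base/hdfs/$d" >&2
      return 1
    fi
  done
  echo "created $base/hdfs/namenode and $base/hdfs/datanode"
}

# Usage (the base directory defaults to ~/hadoopdata):
# prepare_hdfs_dirs
```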

Next, modify Hadoop's configuration files so that it runs in pseudo-distributed mode.

Edit core-site.xml

Open the core-site.xml file with a text editor in the terminal:

vim /home/hadoop/hadoop/etc/hadoop/core-site.xml

In the file, replace the configuration tag and its contents with the following:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

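The fs.defaultFS property sets the default file system URI. Here it points to an HDFS NameNode on localhost, port 9000. As a result, paths without an explicit scheme (for example, in hdfs dfs -ls /) resolve against this HDFS instance.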

Save the file and exit vim after editing.
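If you prefer not to use vim, you can write the same configuration from the shell with a heredoc. This is a minimal sketch that assumes the configuration directory is /home/hadoop/hadoop/etc/hadoop, as above. The function name write_core_site is hypothetical. The function overwrites the whole file, including the license comment that Hadoop ships in it.

```bash
# Hypothetical helper: write core-site.xml without opening an editor.
# Overwrites the entire file in the given configuration directory.
write_core_site() {
  local conf_dir="${1:-/home/hadoop/hadoop/etc/hadoop}"

  # Quoted 'EOF': the XML is written literally, with no variable expansion.
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <!-- Default file system: HDFS NameNode on localhost, port 9000 -->
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
}

# Usage:
# write_core_site /home/hadoop/hadoop/etc/hadoop
```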

Edit hdfs-site.xml

Next, open the hdfs-site.xml configuration file:

vim /home/hadoop/hadoop/etc/hadoop/hdfs-site.xml

In the file, replace the configuration tag and its contents with the following:

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>

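The dfs.replication property sets how many copies of each block HDFS stores. The default is 3. A pseudo-distributed deployment has only one DataNode, so the value is set to 1. The dfs.name.dir and dfs.data.dir properties point the NameNode metadata and the DataNode block storage at the directories created earlier. In Hadoop 2.x and later, these two names are deprecated aliases for dfs.namenode.name.dir and dfs.datanode.data.dir, but Hadoop still accepts them.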

Save the file and exit vim after editing.
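As with core-site.xml, you can write this file non-interactively. This minimal sketch writes exactly the values shown above. It assumes that the hadoop user's home directory is /home/hadoop and that the configuration directory is /home/hadoop/hadoop/etc/hadoop. The function name write_hdfs_site is hypothetical, and the function overwrites the existing file.

```bash
# Hypothetical helper: write hdfs-site.xml for a single-node (pseudo-distributed) setup.
# Overwrites the entire file in the given configuration directory.
write_hdfs_site() {
  local conf_dir="${1:-/home/hadoop/hadoop/etc/hadoop}"

  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <!-- One DataNode, so keep a single replica of each block -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <!-- NameNode metadata directory created earlier -->
    <name>dfs.name.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <!-- DataNode block storage directory created earlier -->
    <name>dfs.data.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>
EOF
}

# Usage:
# write_hdfs_site /home/hadoop/hadoop/etc/hadoop
```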

Edit hadoop-env.sh

Next, edit the hadoop-env.sh file:

vim /home/hadoop/hadoop/etc/hadoop/hadoop-env.sh

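Set JAVA_HOME to the actual location of the installed JDK. In this environment, the JDK is at /usr/lib/jvm/java-11-openjdk-amd64, so the line should read:

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

Setting it in this file ensures that the Hadoop daemons can find Java regardless of the environment they are launched from.

Note: If JAVA_HOME is already set in your shell, the echo $JAVA_HOME command shows the JDK location.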

Save the file and exit vim after editing.
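If JAVA_HOME is not set in your shell, you can derive the JDK location from the java binary on your PATH. To do this, resolve its symlinks and strip the trailing /bin/java. This minimal sketch relies on readlink -f (GNU coreutils or BusyBox) and assumes a JDK 11 style layout such as /usr/lib/jvm/java-11-openjdk-amd64/bin/java. On a JDK 8 layout, where the binary sits under jre/bin, it would return the jre subdirectory instead. Both function names are hypothetical. set_java_home appends a new export line rather than editing the commented-out one, because the last assignment takes effect when hadoop-env.sh is sourced.

```bash
# Hypothetical helper: derive a JDK home from the path of its java binary,
# e.g. /usr/lib/jvm/java-11-openjdk-amd64/bin/java -> /usr/lib/jvm/java-11-openjdk-amd64
java_home_from_binary() {
  local java_bin
  # Resolve symlinks such as /usr/bin/java -> /etc/alternatives/java -> .../bin/java
  java_bin="$(readlink -f "$1")" || return 1
  # Drop the trailing "/bin/java" (two directory levels)
  dirname "$(dirname "$java_bin")"
}

# Hypothetical helper: append an export line to hadoop-env.sh.
# When the file is sourced, the last JAVA_HOME assignment wins.
set_java_home() {
  local env_file="$1" jdk_home="$2"
  printf 'export JAVA_HOME=%s\n' "$jdk_home" >> "$env_file"
}

# Usage:
# jdk="$(java_home_from_binary "$(command -v java)")"
# set_java_home /home/hadoop/hadoop/etc/hadoop/hadoop-env.sh "$jdk"
```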

Edit yarn-site.xml

Next, edit the yarn-site.xml file:

vim /home/hadoop/hadoop/etc/hadoop/yarn-site.xml

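Replace the configuration tag and its contents with the following. The yarn.nodemanager.aux-services property enables the mapreduce_shuffle auxiliary service on the NodeManager. MapReduce jobs need this service to transfer map output to the reduce tasks: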

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

Save the file and exit vim after editing.
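You can write yarn-site.xml the same way. This is a minimal sketch that assumes the same configuration directory as the earlier steps. The function name write_yarn_site is hypothetical, and the function overwrites the existing file.

```bash
# Hypothetical helper: write yarn-site.xml with the MapReduce shuffle service enabled.
# Overwrites the entire file in the given configuration directory.
write_yarn_site() {
  local conf_dir="${1:-/home/hadoop/hadoop/etc/hadoop}"

  cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <!-- Auxiliary service the NodeManager runs for MapReduce shuffle -->
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
}

# Usage:
# write_yarn_site /home/hadoop/hadoop/etc/hadoop
```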

Edit mapred-site.xml

Finally, edit the mapred-site.xml file.

Open the file with vim:

vim /home/hadoop/hadoop/etc/hadoop/mapred-site.xml

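Similarly, replace the configuration tag and its contents with the following. Setting mapreduce.framework.name to yarn makes MapReduce jobs run on YARN. The three *.env properties set HADOOP_MAPRED_HOME for the MapReduce ApplicationMaster, the map tasks, and the reduce tasks, so that each can locate the MapReduce libraries under /home/hadoop/hadoop: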

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.env</name>
    <value>HADOOP_MAPRED_HOME=/home/hadoop/hadoop</value>
  </property>
  <property>
    <name>mapreduce.map.env</name>
    <value>HADOOP_MAPRED_HOME=/home/hadoop/hadoop</value>
  </property>
  <property>
    <name>mapreduce.reduce.env</name>
    <value>HADOOP_MAPRED_HOME=/home/hadoop/hadoop</value>
  </property>
</configuration>

Save the file and exit vim after editing.
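This last file can also be generated from the shell. This minimal sketch takes the HADOOP_MAPRED_HOME value as an optional second argument, which defaults to the /home/hadoop/hadoop path used above. The heredoc delimiter is unquoted so that the variable is expanded. The function name write_mapred_site and its argument order are hypothetical, and the function overwrites the existing file.

```bash
# Hypothetical helper: write mapred-site.xml so MapReduce runs on YARN.
# Arguments: configuration directory, HADOOP_MAPRED_HOME value.
write_mapred_site() {
  local conf_dir="${1:-/home/hadoop/hadoop/etc/hadoop}"
  local mapred_home="${2:-/home/hadoop/hadoop}"

  # Unquoted EOF: ${mapred_home} is expanded inside the heredoc.
  cat > "$conf_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.env</name>
    <value>HADOOP_MAPRED_HOME=${mapred_home}</value>
  </property>
  <property>
    <name>mapreduce.map.env</name>
    <value>HADOOP_MAPRED_HOME=${mapred_home}</value>
  </property>
  <property>
    <name>mapreduce.reduce.env</name>
    <value>HADOOP_MAPRED_HOME=${mapred_home}</value>
  </property>
</configuration>
EOF
}

# Usage:
# write_mapred_site /home/hadoop/hadoop/etc/hadoop /home/hadoop/hadoop
```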